In this post I’ll discuss how to use Python and R to calculate the Pearson Chi-Squared Test for goodness of fit. The chi-squared test for goodness of fit determines how well categorical variables fit some distribution. We assume that the categories are mutually exclusive, and completely cover the sample space. That means that the everything we can think of fits into exactly one category, with no exceptions. For example, suppose we flip a coin to determine if it is fair. The outcomes of this experiment fit into exactly two categories, head and tails. The same goes for rolling a die to determine its fairness; rolls of the die will result in (exactly) one of (exactly) six outcomes or categories. This test is only meaningful with mutually exclusive categories.

A Simple Manual Example

Suppose we have two dice, a black die and a red die, and we’d like to determine if one or both are unfair. We roll each die sixty times and count how many times each comes up on each face:

| Face | Black | Red |

| 1 | 9 | 6 |

| 2 | 10 | 5 |

| 3 | 12 | 14 |

| 4 | 11 | 15 |

| 5 | 8 | 11 |

| 6 | 10 | 9 |

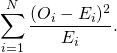

We can calculate the test statistic using the following formula,

(1)

Here, ![]() is the observed frequency, and

is the observed frequency, and ![]() is the expected frequency. Rolling a die sixty times, we would expect each face to come up about ten times, so we would write out,

is the expected frequency. Rolling a die sixty times, we would expect each face to come up about ten times, so we would write out,

![]()

We would take this test statistic, along with the degrees of freedom an calculate the ![]() -value, which represents the probability of a more extreme or more unlikely result occurring if this experiment were repeated many many times. So, degrees of freedom, lets get back to that. This is the trickiest part of the whole thing. In this example there are

-value, which represents the probability of a more extreme or more unlikely result occurring if this experiment were repeated many many times. So, degrees of freedom, lets get back to that. This is the trickiest part of the whole thing. In this example there are ![]() categories, and we can say that there are

categories, and we can say that there are ![]() degrees of freedom because if we know the counts for the faces 1 through 5 (9, 10, 12, 11, 8, respectively), and we know the total number of rolls (60), then we can infer the number of times the die came up with a 6. We can subtract the sum of the other counts from sixty to determine that a 6 came up

degrees of freedom because if we know the counts for the faces 1 through 5 (9, 10, 12, 11, 8, respectively), and we know the total number of rolls (60), then we can infer the number of times the die came up with a 6. We can subtract the sum of the other counts from sixty to determine that a 6 came up ![]() times.

times.

Usually, for situations like this, when we have ![]() categories, we will assume that the degrees of freedom,

categories, we will assume that the degrees of freedom, ![]() , is

, is ![]() . When we have a table instead of a list of counts, then the formula is

. When we have a table instead of a list of counts, then the formula is ![]() , where

, where ![]() is the number of rows in the table, and

is the number of rows in the table, and ![]() is the number of columns.

is the number of columns.

Code for the Dice Example

For R and Python, we can use the default arguments to solve this lickety-split. We’ll look at R first,

black <- c(9,10,12,11,8,10) ; red <- c(6,5,14,15,11,9) ; chisq.test( black )

Chi-squared test for given probabilities

data: black

X-squared = 1, df = 5, p-value = 0.9626

So, for the black die, there’s a 96% chance of seeing more extreme results in the long run. This is what we’d expect, since all the values are close to ten. For red, we’ll provide the observed values, but also the expected proportions.

expected.proportions <- c( 10, 10, 10, 10, 10, 10 ) / 60 chisq.test( red, p=expected.proportions )

Chi-squared test for given probabilities

data: red

X-squared = 8.4, df = 5, p-value = 0.1355

Here, we see that there’s only 13% chance of observing this result in the long run. It’s not a statistically result, but it’s not a sure bet either.

In Python we have a similar story,

import scipy.stats

black = [9,10,12,11,8,10]

red = [6,5,14,15,11,9]

chi2, p = scipy.stats.chisquare( black )

msg = "Test Statistic: {}\np-value: {}"

print( msg.format( chi2, p ) )

Test Statistic: 1.0 p-value: 0.962565773247

If we want to explicitly specify the distribution of counts, then we can do that too,

expected_counts = [10,10,10,10,10,10]

chi2, p = scipy.stats.chisquare( red )

msg = "Test Statistic: {}\np-value: {}"

print( msg.format( chi2, p ) )

Test Statistic: 8.4 p-value: 0.135525223378

This agrees with the results from R. The difference is that R expects proportions instead of counts, and that you have to pass the proportions explicitly to the p= argument. Both languages calculate the degrees of freedom as ![]() where

where ![]() is the number of categories, or rather, the length of the input.

is the number of categories, or rather, the length of the input.

A Tabular Example

Suppose we look at the House of Representatives for the 113th Congress. This data is taken from www.senate.gov.

| Republican | Democrat | Totals | |

| M | 215 | 143 | 358 |

| F | 19 | 64 | 83 |

| Totals | 234 | 207 | 441 |

So, we can basically eyeball this thing and say that it doesn’t square up with what we’d expect from a population that is roughly fifty percent female.

Code for the Congressional Example

Using R, we can create a table and dimensions using the following code. This is loosely borrowed from the documentation page for the chisq.test() function.

house <- as.table( rbind( c(215,143), c(19,64) ) ) ;

dimnames( house ) <- list( gender=c('M','F'), party=c('Rep','Dem') ) ;

chisq.test( house )

Pearson's Chi-squared test with Yates' continuity correction

data: house

X-squared = 35.8878, df = 1, p-value = 2.09e-09

In Python, we use the scipy.stats.chi2_contingency() function.

house = [ [ 215, 143 ], [ 19, 64 ] ]

chi2, p, ddof, expected = scipy.stats.chi2_contingency( house )

msg = "Test Statistic: {}\np-value: {}\nDegrees of Freedom: {}\n"

print( msg.format( chi2, p, ddof ) )

print( expected )

Test Statistic: 35.8877686481 p-value: 2.09016744218e-09 Degrees of Freedom: 1 [[ 189.95918367 168.04081633] [ 44.04081633 38.95918367]]

Here, the table below the values is what the function predicted the expected counts to be, based on the marginals of the table. Like the R function, this automatically use the Yates’ correction for continuity.

This is the worst article I have read.

This article does not explain anything. It shows the author know bit of python and lacks any capability to draw conclusions on the result!!!