In this post I will use Python to explore more measures of fit for linear regression. I will consider the coefficient of determination (R2), hypothesis tests (![]() ,

, ![]() , Omnibus), AIC, BIC, and other measures. This will be an expansion of a previous post where I discussed how to assess linear models in R, via the IPython notebook, by looking at the residual, and several measures involving the leverage.

, Omnibus), AIC, BIC, and other measures. This will be an expansion of a previous post where I discussed how to assess linear models in R, via the IPython notebook, by looking at the residual, and several measures involving the leverage.

First we will look at a small data set from DASL library, regarding the correlation between tobacco and alcohol purchases in different regions of the United Kingdom. The interesting feature of this data set is that Northern Ireland is reported as an outlier. Notwithstanding, we will use this data set to describe two tools for calculating a linear regression. We will alternatively use the statsmodels and sklearn modules for caluclating the linear regression, while using pandas for data management, and matplotlib for plotting. To begin, we will import the modules,

import numpy as np import pandas from pandas import DataFrame, Series import statsmodels.formula.api as sm from sklearn.linear_model import LinearRegression import scipy, scipy.stats

Data

I copied the data from here and pasted it between a pair of triple quotes in the IPython Notebook, as so,

data_str = '''Region Alcohol Tobacco North 6.47 4.03 Yorkshire 6.13 3.76 Northeast 6.19 3.77 East Midlands 4.89 3.34 West Midlands 5.63 3.47 East Anglia 4.52 2.92 Southeast 5.89 3.20 Southwest 4.79 2.71 Wales 5.27 3.53 Scotland 6.08 4.51 Northern Ireland 4.02 4.56'''

Each line ends in a newline, and each datum is delimited by a tab, so we first split the string over the newlines, and then split each new datum using the tabs, like this,

d = data_str.split('n')

d = [ i.split('t') for i in d ]

Next, we make sure any numbers register as numbers, while leaving the strings for the regions alone.

for i in range( len( d ) ):

for j in range( len( d[0] ) ):

try:

d[i][j] = float( d[i][j] )

except:

pass

Finally, we wrap this data in a pandas DataFrame. The neat thing about a DataFrame, is that it lets you access whole variables by keyword, like a dictionary or hash, individual elements by position, as in an array, or through SQL-like logical expressions, like a database. Furthermore, it has great support for dates, missing values, and plotting.

df = DataFrame( d[1:], columns=d[0] )

We give the DataFrame two arguments, the data, and then labels for the columns, taken from the first row of our list, d. This will allow us to refer to the column containing alcohol data as df.Alcohol. Note the similarity to R, where we would refer to it as df$Alcohol. This convention makes it easier to read the code at a later date.

Now that we have our data loaded, before we do anything else, let’s plot our data and see what it looks like.

scatter( df.Tobacco, df.Alcohol,

marker='o',

edgecolor='b',

facecolor='none',

alpha=0.5 )

xlabel('Tobacco')

ylabel('Alcohol')

savefig('alcohol_v_tobacco.png', fmt='png', dpi=100)

We notice that there seems to be a linear trend, and one outlier, which corresponds to North Ireland.

Regression Using Pandas and Statsmodels

To perform ordinary least squares regression on the alcohol consumption as a function of tobacco consumption, we enter the following code. Note that we are excluding the last datum, which refers to the outlying North Ireland data. We’ll give an example of the data with that outlier later; for now, we will focus on the “cleaner” data.

df['Eins'] = np.ones(( len(df), )) Y = df.Alcohol[:-1] X = df[['Tobacco','Eins']][:-1] result = sm.OLS( Y, X ).fit() result.summary()

Since we want a linear model that looks like ![]() , we need to add an extra array or vector of ones to our independent variable,

, we need to add an extra array or vector of ones to our independent variable, df.Tobacco because the statsmodels OLS() function does not assume that we would like a constant or intercept intercept term, ![]() . This is not so uncommon as it would seem; several regression packages make this requirement. The

. This is not so uncommon as it would seem; several regression packages make this requirement. The summary() method produces the following human-readable output.

OLS Regression Results

| Dep. Variable: | Alcohol | R-squared: | 0.615 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.567 |

| Method: | Least Squares | F-statistic: | 12.78 |

| Date: | Tue, 11 Feb 2014 | Prob (F-statistic): | 0.00723 |

| Time: | 21:05:17 | Log-Likelihood: | -4.9998 |

| No. Observations: | 10 | AIC: | 14.00 |

| Df Residuals: | 8 | BIC: | 14.60 |

| Df Model: | 1 |

| coef | std err | t | P>|t| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| x1 | 1.0059 | 0.281 | 3.576 | 0.007 | 0.357 1.655 |

| const | 2.0412 | 1.001 | 2.038 | 0.076 | -0.268 4.350 |

| Omnibus: | 2.542 | Durbin-Watson: | 1.975 |

|---|---|---|---|

| Prob(Omnibus): | 0.281 | Jarque-Bera (JB): | 0.904 |

| Skew: | -0.014 | Prob(JB): | 0.636 |

| Kurtosis: | 1.527 | Cond. No. | 27.2 |

And now we have a very nice table of mostly meaningless numbers. I’ll go through and explain each one. The left column of the first table is mostly self explanatory. The degrees of freedom of the model are the number of predictor, or explanatory variables. The degrees of freedom of the residuals is the number of observations minus the degrees of freedom of the model, minus one.

Most of the values listed in the summary are available via the result object. For instance, the R2 value is obtained by result.rsquared. If you are using IPython, you may type results. and hit the TAB key, and a list of attributes for the results object will drop down.

R2

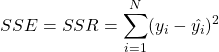

The ![]() term is the coefficient of determination and it usually reflects how well the model fits the observed data. The coefficient of determination is usually given by,

term is the coefficient of determination and it usually reflects how well the model fits the observed data. The coefficient of determination is usually given by,

(1)

(2)

(3) ![]()

Where ![]() is an observed response,

is an observed response, ![]() is the mean of the observed responses,

is the mean of the observed responses, ![]() is a prediction of the response made by the linear model, and

is a prediction of the response made by the linear model, and ![]() is the residual, i.e., the difference between the response and the prediction. Also,

is the residual, i.e., the difference between the response and the prediction. Also, ![]() is called the sum of the squared error, or

is called the sum of the squared error, or ![]() the sum of the squared residuals, and

the sum of the squared residuals, and ![]() is called the total sum of squares.

is called the total sum of squares.

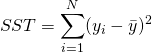

Adjusted R2

As you incorporate more predictor variables then ![]() typically increases because you’re trying to map a much larger input space onto a single scalar prediction. This is known as the Curse of Dimensionality. (Dunh duh DUUUUNH!) The adjusted

typically increases because you’re trying to map a much larger input space onto a single scalar prediction. This is known as the Curse of Dimensionality. (Dunh duh DUUUUNH!) The adjusted ![]() takes into account the number of predictor variables (the degrees of freedom) and number of observations. Let

takes into account the number of predictor variables (the degrees of freedom) and number of observations. Let ![]() be the number of observations, and

be the number of observations, and ![]() be the number of predictors, then the adjusted

be the number of predictors, then the adjusted ![]() is given by,

is given by,

(4)

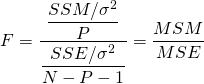

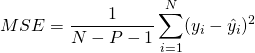

F-statistic

N.B. In the following discussion of ![]() -tests and

-tests and ![]() -tests, please bear in mind that squinting over a p-values at

-tests, please bear in mind that squinting over a p-values at ![]() significance levels is silly, because your model is built upon simplifying and inaccurate assumptions. Hypothesis testing should guide your decision making, not dictate it.

significance levels is silly, because your model is built upon simplifying and inaccurate assumptions. Hypothesis testing should guide your decision making, not dictate it.

That being said, the null hypothesis of the ![]() -test is that the data can be modeled accurately by setting the regression coefficients to zero. The alternative hypothesis is that at least one of the regression coefficients should be non-zero. If the

-test is that the data can be modeled accurately by setting the regression coefficients to zero. The alternative hypothesis is that at least one of the regression coefficients should be non-zero. If the ![]() -distribution provides a p-value that is lower than some threshold

-distribution provides a p-value that is lower than some threshold ![]() , then we reject the null hypothesis, and and say that our model is, in fact, “doing something with its life.” The

, then we reject the null hypothesis, and and say that our model is, in fact, “doing something with its life.” The ![]() statistic is computed as the ratio of two

statistic is computed as the ratio of two ![]() distributed variables, discussed below.

distributed variables, discussed below.

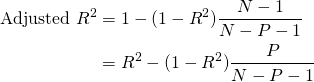

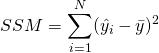

We will introduce another sum of squares term, the model sum of squares, or the explained sum of squares,

(5)

We will state without further explanation that ![]() is a

is a ![]() random variable with

random variable with ![]() degrees of freedom, where

degrees of freedom, where ![]() is the number of predictor variables. Likewise,

is the number of predictor variables. Likewise, ![]() is a

is a ![]() random variable with

random variable with ![]() degrees of freedom, where

degrees of freedom, where ![]() is the number of observations. We subtract

is the number of observations. We subtract ![]() from

from ![]() because we would like to subtract the number or predictor variables plus the constant term, from the number of observations. In both cases,

because we would like to subtract the number or predictor variables plus the constant term, from the number of observations. In both cases, ![]() is the variance of the response variable. Furthermore, if

is the variance of the response variable. Furthermore, if ![]() is a

is a ![]() distribution with

distribution with ![]() degrees of freedom, and

degrees of freedom, and ![]() is a

is a ![]() distribution with

distribution with ![]() degrees of freedom, then

degrees of freedom, then

(6) ![]()

is an ![]() distribution with

distribution with ![]() degrees of freedom. Putting this together, where

degrees of freedom. Putting this together, where ![]() , and

, and ![]() , we obtain,

, we obtain,

(7)

(8)

(9)

So now we have a nice ![]() -statistic, and we can use its degrees of freedom,

-statistic, and we can use its degrees of freedom, ![]() and

and ![]() , to determine the probability of observing the calculated

, to determine the probability of observing the calculated ![]() -statistic. So, we can check this in Python by saying,

-statistic. So, we can check this in Python by saying,

N = result.nobs

P = result.df_model

dfn, dfd = P, N - P - 1

F = result.mse_model / result.mse_resid

p = 1.0 - scipy.stats.f.cdf(F,dfn,dfd)

print 'F-statistic: {:.3f}, p-value: {:.5f}'.format( F, p )

F-statistic: 12.785, p-value: 0.00723

Here, scipy.stats.f.cdf( F, m, n ) returns the cumulative sum of the ![]() -distribution with shape parameters

-distribution with shape parameters m = P = 1, and n = N - P - 1 = 8, up to the ![]() statistic

statistic F. Subtracting this quantity from one, we obtain the probability in the tail, which represents the probability of observing ![]() statistics more extreme than the one observed.

statistics more extreme than the one observed.

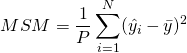

Log-Likelihood

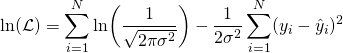

If we have several possible models, and we assume that the errors for each of the models are normally distributed about zero, then we can write the likelihood function for a single model as,

(10)

We can simplify and expedite the computation by caluclating the logarithm, instead.

(11)

We can calculate this in Python as follows.

N = result.nobs SSR = result.ssr s2 = SSR / N L = ( 1.0/np.sqrt(2*np.pi*s2) ) ** N * np.exp( -SSR/(s2*2.0) ) print 'ln(L) =', np.log( L )

ln(L) = -4.9997586973859809

Here, result.ssr is the sum of the squared residuals, and that dividing this by the number of observations, N, we obtain the sample variance ![]()

s2.

AIC and BIC

The Akaike information criterion (AIC) and the Bayesian information criterion (BIC) are based on the log-likelihood described in the previous section. Both measures introduce a penalty for model complexity, but the AIC penalizes complexity less severely than the BIC. The AIC and BIC are given by,

(12) ![]()

(13) ![]()

Here, ![]() is the number of observations,

is the number of observations, ![]() is the number of parameters, and

is the number of parameters, and ![]() is the log-likelihood. We have two parameters in this example, the slope and intercept. The AIC is a relative estimate of information loss between different models. The BIC was initially proposed using a Bayesian argument, and is not related to ideas of information. Both measures are only used when trying to decide between different models. So, if you have one regression for alcohol sales based on cigarette sales, and another model for alcohol consumption that incorporated cigarette sales and lighter sales, then you would be inclined to choose the model that had the lower AIC or BIC value.

is the log-likelihood. We have two parameters in this example, the slope and intercept. The AIC is a relative estimate of information loss between different models. The BIC was initially proposed using a Bayesian argument, and is not related to ideas of information. Both measures are only used when trying to decide between different models. So, if you have one regression for alcohol sales based on cigarette sales, and another model for alcohol consumption that incorporated cigarette sales and lighter sales, then you would be inclined to choose the model that had the lower AIC or BIC value.

Coefficients

The coefficients or weights of the linear regression are contained in the attribute params, and returned as a pandas Series object, since we used a pandas DataFrame as input. This is nice, because the coefficients are named for convenience.

result.params

x1 1.005896 const 2.041223 dtype: float64

We can obtain this directly by computing,

(14) ![]()

Here, ![]() is the matrix of predictor variables as columns, with an extra column of ones for the constant term,

is the matrix of predictor variables as columns, with an extra column of ones for the constant term, ![]() is the column vector of the response variable, and

is the column vector of the response variable, and ![]() is the column vector of coefficients corresponding to the columns of

is the column vector of coefficients corresponding to the columns of ![]() .

.

Standard Error

we will calculate the covariance-variance matrix, also called the covariance matrix, for the estimated coefficients ![]() of the predictor variables using the following formula,

of the predictor variables using the following formula,

(15) ![]()

Here, ![]() is the variance, or the MSE–the mean squared error of the residuals. The standard errors are the square roots of the elements on the main diagonal of this covariance matrix. We can perform the operation above, and calculate the element-wise square root using the following Python code,

is the variance, or the MSE–the mean squared error of the residuals. The standard errors are the square roots of the elements on the main diagonal of this covariance matrix. We can perform the operation above, and calculate the element-wise square root using the following Python code,

X = df.Tobacco[:-1] # add a column of ones for the constant intercept term X = np.vstack(( X, np.ones( X.size ) )) # convert the NumPy arrray to matrix X = np.matrix( X ) # perform the matrix multiplication, # and then take the inverse C = np.linalg.inv( X * X.T ) # multiply by the MSE of the residual C *= result.mse_resid # take the square root SE = np.sqrt(C) print SE

[[ 0.28132158 nan] [ nan 1.00136021]]

t-statistic

We use the ![]() -test to test the null hypothesis that the coefficient of a given predictor variable is zero, implying that a given predictor has no appreciable effect on the response variable. The alternative hypothesis is that the predictor does contribute to the response. In testing we set some threshold,

-test to test the null hypothesis that the coefficient of a given predictor variable is zero, implying that a given predictor has no appreciable effect on the response variable. The alternative hypothesis is that the predictor does contribute to the response. In testing we set some threshold, ![]() , and if

, and if ![]() , then we reject the null hypothesis at our threshold

, then we reject the null hypothesis at our threshold ![]() , otherwise we fail to reject the null hypothesis. The

, otherwise we fail to reject the null hypothesis. The ![]() -test generally allows us to evaluate the importance of different predictors, assuming that the residuals of the model are normally distributed about zero. If the residuals do not behave in this manner, then that suggests that there is some non-linearity between the variables, and that their

-test generally allows us to evaluate the importance of different predictors, assuming that the residuals of the model are normally distributed about zero. If the residuals do not behave in this manner, then that suggests that there is some non-linearity between the variables, and that their ![]() -tests should not be used to asses the importance of individual predictors. Furthermore, it might be best to try to modify the model so that the residuals do tend the cluster normally about zero.

-tests should not be used to asses the importance of individual predictors. Furthermore, it might be best to try to modify the model so that the residuals do tend the cluster normally about zero.

The ![]() statistic is given by the ratio of the coefficient (or factor) of the predictor variable of interest, and its corresponding standard error. If

statistic is given by the ratio of the coefficient (or factor) of the predictor variable of interest, and its corresponding standard error. If ![]() is the vector of coefficients or factors of our predictor variables, and

is the vector of coefficients or factors of our predictor variables, and ![]() is our standard error, then the

is our standard error, then the ![]() statistic is given by,

statistic is given by,

(16) ![]()

So, for the first factor, corresponding to the slope in our example, we have the following code,

i = 0 beta = result.params[i] se = SE[i,i] t = beta / SE print 't =', t

t = 3.5756084542390316

Once we have a ![]() statistic, we can (sort of) calculate the probability of observing a statistic at least as extreme as what we’ve already observed, given our assumptions about the normality of our errors by using the code,

statistic, we can (sort of) calculate the probability of observing a statistic at least as extreme as what we’ve already observed, given our assumptions about the normality of our errors by using the code,

N = result.nobs P = result.df_model dof = N - P - 1 hp = 1.0 - scipy.stats.t( dof ).cdf( t ) p = hp * 2.0

Here, dof are the degrees of freedom, which should be eight, which is the number of observations, N, minus the number of parameters, which is two. The CDF is the cumulative sum of the PDF. We’re interested in the area under the right hand tail, beyond our ![]() statistic,

statistic, t, so we subtract the sumulative sum up to that statistic from one in order to obtain the tail probability on the other side. We then multiply this tail probability by two to obtain a two-tailed probability.

Confidence Interval

The confidence interval is built using the standard error, the p-value from our ![]() -test, and a critical value from a

-test, and a critical value from a ![]() -test having

-test having ![]() degrees of freedom, where

degrees of freedom, where ![]() is the number of observations and

is the number of observations and ![]() is the number of model parameters, i.e., the number of predictor variables. The confidence interval is the the range of values we’d expect to find the parameter of interest, based on what we’ve observed. You will note that we have a confidence interval for predictor variable coefficient, and the constant term. A smaller confidence interval suggests that we are confident about the value of the estimated coefficient, or constant term. A larger confidence interval suggests that there is more uncertainty or variance in the estimated term. Again, let me reiterate that hypothesis testing is only one perspective. Furthermore, it is a perspective that was developed in the late nineteenth and early twentieth centuries when data sets were generally smaller and more expensive to gather, and data scientists were using books of logarithm tables for arithmetic.

is the number of model parameters, i.e., the number of predictor variables. The confidence interval is the the range of values we’d expect to find the parameter of interest, based on what we’ve observed. You will note that we have a confidence interval for predictor variable coefficient, and the constant term. A smaller confidence interval suggests that we are confident about the value of the estimated coefficient, or constant term. A larger confidence interval suggests that there is more uncertainty or variance in the estimated term. Again, let me reiterate that hypothesis testing is only one perspective. Furthermore, it is a perspective that was developed in the late nineteenth and early twentieth centuries when data sets were generally smaller and more expensive to gather, and data scientists were using books of logarithm tables for arithmetic.

The confidence interval is given by,

(17) ![]()

Here, ![]() is one of the estimated coefficients,

is one of the estimated coefficients, ![]() is a critical-value, which is the

is a critical-value, which is the ![]() -statistic required to obtain a probability less than the

-statistic required to obtain a probability less than the ![]() significance level, and

significance level, and ![]() is the standard error. The critical value is calculated using the inverse of the cumulative distribution function. (The cumulative distribution function is the cumulative sum of the probability distribution.) In code, the confidence interval using a

is the standard error. The critical value is calculated using the inverse of the cumulative distribution function. (The cumulative distribution function is the cumulative sum of the probability distribution.) In code, the confidence interval using a ![]() -distribution looks like,

-distribution looks like,

i = 0 # the estimated coefficient, and its variance beta, c = result.params[i], SE[i,i] # critical value of the t-statistic N = result.nobs P = result.df_model dof = N - P - 1 z = scipy.stats.t( dof ).ppf(0.975) # the confidence interval print beta - z * c, beta + z * c

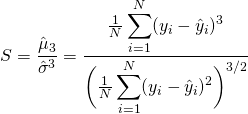

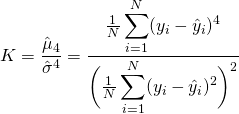

Skewness and Kurtosis

Skew and kurtosis refer to the shape of a (normal) distribution. Skewness is a measure of the asymmetry of a distribution, and kurtosis is a measure of its curvature, specifically how peaked the curve is. These values are calculated as,

(18)

(19)

The ![]() and

and ![]() are the third and fourth central moments, which are beyond the present scope of this post. One possible Python implementation would be,

are the third and fourth central moments, which are beyond the present scope of this post. One possible Python implementation would be,

d = Y - result.fittedvalues

S = np.mean( d**3.0 ) / np.mean( d**2.0 )**(3.0/2.0)

K = np.mean( d**4.0 ) / np.mean( d**2.0 )**(4.0/2.0)

print 'Skewness: {:.3f}, Kurtosis: {:.3f}'.format( S, K )

Skewness: -0.014, Kurtosis: 1.527

Omnibus Test

The Omnibus test uses skewness and kurtosis to test the null hypothesis that a distribution is normal. In this case, we’re looking at the distribution of the residual. If we obtain a very small value for ![]() , then the residuals are not normally distributed about zero, and we should maybe look at our model more closely. The

, then the residuals are not normally distributed about zero, and we should maybe look at our model more closely. The statsmodels OLS function uses the scipy.stats.normaltest() function. If you’re interested, the K2 test developed by D’Agostino, D’Agostino Jr., and Belanger 1, with a correction added by Royston 2, is presented below, which is adapted from a random Stata manual I found 3. It’s a real booger.

def Z1( s, n ):

Y = s * np.sqrt( ( ( n + 1 )*( n + 3 ) ) / ( 6.0 * ( n - 2.0 ) ) )

b = 3.0 * ( n**2.0 + 27.0*n - 70 )*( n + 1.0 )*( n + 3.0 )

b /= ( n - 2.0 )*( n + 5.0 )*( n + 7.0 )*( n + 9.0 )

W2 = - 1.0 + np.sqrt( 2.0 * ( b - 1.0 ) )

alpha = np.sqrt( 2.0 / ( W2 - 1.0 ) )

z = 1.0 / np.sqrt( np.log( np.sqrt( W2 ) ) )

z *= np.log( Y / alpha + np.sqrt( ( Y / alpha )**2.0 + 1.0 ) )

return z

def Z2( k, n ):

E = 3.0 * ( n - 1.0 ) / ( n + 1.0 )

v = 24.0 * n * ( n - 2.0 )*( n - 3.0 )

v /= ( n + 1.0 )**2.0*( n + 3.0 )*( n + 5.0 )

X = ( k - E ) / np.sqrt( v )

b = ( 6.0 * ( n**2.0 - 5.0*n + 2.0 ) ) / ( ( n + 7.0 )*( n + 9.0 ) )

b *= np.sqrt( ( 6.0 * ( n + 3.0 )*( n + 5.0 ) ) / ( n * ( n - 2.0 )*( n - 3.0 ) ) )

A = 6.0 + ( 8.0 / b )*( 2.0 / b + np.sqrt( 1.0 + 4.0 / b**2.0 ) )

z = ( 1.0 - 2.0 / A ) / ( 1.0 + X * np.sqrt( 2.0 / ( A - 4.0 ) ) )

z = ( 1.0 - 2.0 / ( 9.0 * A ) ) - z**(1.0/3.0)

z /= np.sqrt( 2.0 / ( 9.0 * A ) )

return z

K2 = Z1( S, N )**2.0 + Z2( K, N )**2.0

print 'Omnibus: {}'.format( K2)

Omnibus: 2.54189816906

The K2 statistic has an approximately ![]() distribution, with two degrees of freedom, so we can calculate a p-value by saying,

distribution, with two degrees of freedom, so we can calculate a p-value by saying,

p = 1.0 - scipy.stats.chi2(2).cdf( K2 )

print 'Pr( Omnibus ) = {}'.format( p )

Pr( Omnibus ) = 0.280565215271

Thus, if either the skewness or kurtosis suggests non-normality, this test should pick it up.

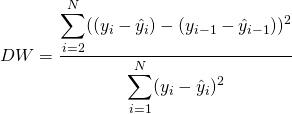

Durbin-Watson

The Durbin-Watson test checks for autocorrelation by looking at he residuals separated by some lag; here the lag is one.

(20)

DW = np.sum( np.diff( result.resid.values )**2.0 ) / result.ssr

print 'Durbin-Watson: {:.5f}'.format( DW )

Durbin-Watson: 1.97535

The Durbin-Watson statistic is approximately equal to ![]() , where

, where ![]() is the sample autocorrelation. The statistic ranges from zero to four, and a value around two suggests that there is no autocorrelation. Values greater than two suggest negative correlation, and values less that one suggest positive correlation.

is the sample autocorrelation. The statistic ranges from zero to four, and a value around two suggests that there is no autocorrelation. Values greater than two suggest negative correlation, and values less that one suggest positive correlation.

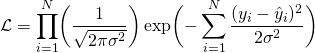

Jarque-Bera Test

The Jarque-Bera test is another test that considers skewness (S), and kurtosis (K). The null hypothesis is that the distribution is normal, that both the skewness and excess kurtosis equal zero, or alternatively, that the skewness is zero and the regular run-of-the-mill kurtosis is three. Unfortunately, with small samples the Jarque-Bera test is prone rejecting the null hypothesis–that the distribution is normal–when it is in fact true.

(21) ![]()

We can calculate the ![]() -statistic as follows,

-statistic as follows,

JB = (N/6.0) * ( S**2.0 + (1.0/4.0)*( K - 3.0 )**2.0 )

Calculating the probability using the ![]() distribution with two degrees of freedom we have,

distribution with two degrees of freedom we have,

p = 1.0 - scipy.stats.chi2(2).cdf(JB)

print 'JB-statistic: {:.5f}, p-value: {:.5f}'.format( JB, p )

JB-statistic: 0.90421, p-value: 0.63629

Condition Number

The condition number measures the sensitivity of a function’s output to its input. When two predictor variables are highly correlated, which is called multicolinearity, the coefficients or factors of those predictor variables can fluctuate erratically for small changes in the data, or the model. Ideally, similar models should be similar, i.e., have approximately equal coefficients. Multicolinearity can cause numerical matrix inversion to crap out, or produce inaccurate results. One approach to this problem in regression is the technique of ridge regression, which is available in the sklearn Python module.

We calculate the condition number by taking the eigenvalues of the product of the predictor variables (including the constant vector of ones) and then taking the square root of the ratio of the largest eigenvalue to the least eigenvlaue. If the condition number is greater than thirty, then the regression may have multicolinearity.

X = np.matrix( X ) EV = np.linalg.eig( X.T * X ) print EV

[ 136.51527115 0.18412885]

Note that X.T * X should be ![]() , where

, where ![]() is the number of degrees of freedom of the model (the number of predictors) and the

is the number of degrees of freedom of the model (the number of predictors) and the ![]() represents the addition of the constant vector of ones for the intercept term. In our case, the product should be a

represents the addition of the constant vector of ones for the intercept term. In our case, the product should be a ![]() matrix, so we’ll have two eigenvalues. Then our condition number is given by,

matrix, so we’ll have two eigenvalues. Then our condition number is given by,

CN = np.sqrt( EV.max() / EV.min() )

print 'Condition No.: {:.5f}'.format( CN )

Condition No.: 27.22887

Our condition number is juuust below 30, so we can sort of sleep okay.

Comparison

Now that we have seen an example of linear regression with a reasonable degree of linearity, compare that with an example of one with a significant outlier. In practice, outliers should be understood before they are discarded, because they might turn out to be very important. They might signify a new trend, or some possibly catastrophic event.

X = df[['Tobacco','Eins']] Y = df.Alcohol result = sm.OLS( Y, X ).fit() result.summary()

OLS Regression Results with Outliers

| Dep. Variable: | Alcohol | R-squared: | 0.050 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | -0.056 |

| Method: | Least Squares | F-statistic: | 0.4735 |

| Date: | Tue, 18 Feb 2014 | Prob (F-statistic): | 0.509 |

| Time: | 01:09:35 | Log-Likelihood: | -12.317 |

| No. Observations: | 11 | AIC: | 28.63 |

| Df Residuals: | 9 | BIC: | 29.43 |

| Df Model: | 1 |

| coef | std err | t | P>|t| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| Tobacco | 0.3019 | 0.439 | 0.688 | 0.509 | -0.691 1.295 |

| Eins | 4.3512 | 1.607 | 2.708 | 0.024 | 0.717 7.986 |

| Omnibus: | 3.123 | Durbin-Watson: | 1.655 |

|---|---|---|---|

| Prob(Omnibus): | 0.210 | Jarque-Bera (JB): | 1.397 |

| Skew: | -0.873 | Prob(JB): | 0.497 |

| Kurtosis: | 3.022 | Cond. No. | 25.5 |

Regression Using Sklearn

In order to use sklearn, we need to input our data in the form of vertical vectors. Whenever one slices off a column from a NumPy array, NumPy stops worrying whether it is a vertical or horizontal vector. MATLAB works differently, as it is primarily concerned with matrix operations. NumPy, however has a matrix class for whenever the verticallness or horizontalness of an array is important. Therefore, in our case, we’ll cast the DataFrame as a NumPy array, and then cast it as a Numpy matrix so that vertical arrays stay vertical once they are sliced off the data set.

data = np.matrix( df )

Next, we create the regression objects, and fit the data to them. In this case, we’ll consider a clean set, which will fit a linear regression better, which consists of the data for all of the regions except Northern Ireland, and an original set consisting of the original data

cln = LinearRegression()

org = LinearRegression()

X, Y = data[:,2], data[:,1]

cln.fit( X[:-1], y[:-1] )

org.fit( X, Y )

clean_score = '{0:.3f}'.format( cln.score( X[:-1], Y[:-1] ) )

original_score = '{0:.3f}'.format( org.score( X, Y ) )

The next piece of code produces a scatter plot of the regions, with all of the regions plotted as empty blue circles, except for Northern Ireland, which is depicted as a red x.

scatter( df.Tobacco[:-1], df.Alcohol[:-1],

marker='o', facecolors='none', edgecolors='b', s=20,

label='All other regions,  ='+clean_score )

scatter( df.Tobacco[-1:], df.Alcohol[-1:],

marker='x', color='r',

label='N. Ireland, outlier,

='+clean_score )

scatter( df.Tobacco[-1:], df.Alcohol[-1:],

marker='x', color='r',

label='N. Ireland, outlier,  ='+original_score )

='+original_score )

The next part generates a set of points from 2.5 to 4.85, and then predicts the response of those points using the linear regression object trained on the clean and original sets, respectively.

test = np.arange( 2.5, 4.85, 0.1 ) test = np.array( np.matrix( test ).T ) plot( test, cln.predict( test ), 'k' ) plot( test, org.predict( test ), 'k--' )

Finally, we limit and label the axes, add a title, overlay a grid, place the lengend at the bottom, and then save the figure.

xlabel('Tobacco') ; xlim(2.5,4.75)

ylabel('Alcohol') ; ylim(2.75,7.0)

title('Regression of Alcohol from Tobacco') ;

grid() ;

legend(loc='lower center')

savefig( 'alcohol_regressed_over_tobacco.png', fmt='PNG', dpi=100 )

Conclusion

Before you do anything, visualize your data. If your data is highly dimensional, then at least examine a few slices using boxplots. At the end of the day, use your own judgement about a model based on your knowledge of your domain. Statistical tests should guide your reasoning, but they shouldn’t dominate it. In most cases, your data will not align itself with the assumptions made by most of the available tests. Here is a very interesting article from Nature on classical hypothesis testing. A more intuitive approach to hypothesis testing is Bayesian analysis. I recommend John Kruschke’s book on the topic. It’s got puppy dogs on the cover, but it’s a clear and thorough, it comes with R code for all of the examples, and there is a very generous solution set available online for the rest of the problems. Other great free books on Python and stats and Bayesian methods are available at Green Tea Press.

-

D’Agostino, R. B., A. J. Belanger, and R. B. D’Agostino, Jr. 1990. A suggestion for using powerful and informative

tests of normality. American Statistician 44: 316–321. ↩ -

Royston, P. 1991. sg3.5: Comment on sg3.4 and an improved D’Agostino test. Stata Technical Bulletin 3: 23–24. Reprinted

in Stata Technical Bulletin Reprints, vol. 1, pp. 110–112. College Station, TX: Stata Press. ↩ - http://www.stata.com/manuals13/rsktest.pdf ↩

You wrote F=(SSM/sigama2/P) / (SSE/sigma2/(N-P-1)) = MSE/MSM

Isn’t it MSM/MSE?

You’re absolutely right, thanks! I’ll change that right away!

Great, thanks!

Thank you for the for the answer, It’s great guide, specially for me that I’m a R user and I want to learn Python for statistics.

I have another question: s2 (for the log-likelihood) is estimated through SSE/n, and the logL value match the one in the summary of ‘result’, but isn’t it better use the unbiased estimator of s2?

I love Python, and it is pretty great for most things, but I think R is still the best for statistics.

I admit that I do not know. My main objective was to be able to interpret and reproduce the output of Python and R linear modeling tools. I’ll look into this and try to get back to you about it. Thanks for your questions!

I’ve replicated the regression with R

Y <- c(6.47, 6.13, 6.19, 4.89, 5.63, 4.52, 5.89, 4.79, 5.27, 6.08)

X <- c(4.03, 3.76, 3.77, 3.34, 3.47, 2.92, 3.2 , 2.71, 3.53, 4.51)

res <- lm(Y ~ X)

it returns the unbiesed estimator of sigma (MSE**0.5):

res$sigma

but the log-likelihood with the biesed one (as same as Python)

logLik(res)

so I found in my books that SSE/N is the maximum-likelihood estimator for sigma^2, and so I think that’s why…

than I tried

AIC(res)

BIC(res)

…

That’s interesting. I was just reading about how biased estimates are not always a bad thing. Sometimes, like on this example, it lowers the MSE, and in other cases it can make the distribution median unbiased so that the median is preserved in monotonic nonlinear transformations. A monotonic transformation is one where if x < y, then T(x) < T(y) after the transformation.

Hello,

Under the sklearn section,

When I attempt

perform the matrix multiplication,

and then take the inverse

C = np.linalg.inv( X * X.T )

I get:

*** ValueError: operands could not be broadcast together with shapes (11,2) (2,11)

In fact I get this error just doing

Am I misunderstanding what you wrote, or was this your intention?

I assume your intention is to do a dot product, but you should note, that X * X.T will not do a dot product (at least not in the version 1.7.1 of numpy that I am working in).

In fact, unless X.shape[0] == X.shape[1], you will get an error if you try to do X * X.T –and if it does complete, it will only be doing element-wise multiplication within X and X.T, which is probably not what you want.

Perhaps you meant:

I’d really appreciate any thoughts you have on this. Thanks for any follow up.

If it helps, here is the exact X that I am working with:

X = np.random.rand(2)

for idx in range(10):

X = np.vstack( ( X, np.random.rand(2) ) )

X

np. array([[ 3.062, -3.0754],

[ 2.500, 4.2257],

[ -1.050, 0.00000],

[ 4.93, 3.9979],

[ -2.500, 3.30],

[ 5.562, 1.15],

[ -5.958, 1.01],

[ 3.625, -6.89],

[ -7.291, 2.745],

[ 3.062, -3.07],

[ 2.50, 4.22],

[ -1.05, 0.000],

[ 4.937, 3.997],

[ -2.500, 3.307],

[ 5.562, 1.150],

[ -5.958, 1.018]])

** Under the Standard Error Section… not the sklearn section…

Hi, thanks for your interest. You will need to cast X as a matrix, as in X = np.matrix( X ), before the matrix multiplication will work. When X is a NumPy array, it does multiplication element-wise by default.

Wow, just wow. Really comprehensive post! I learned A LOT. Personally, I am more into ANOVAs but this helped me understand statistics AND programming in a new way. Cool post.