In this post I will look at several techniques for assessing linear models in R, via the IPython Notebook interface. I find the notebook interface to be more convenient for development and debugging because it allows one to evaluate cells instead of going back and forth between a script and a terminal.

R in the IPython Notebook

If you do not have the IPython Notebook, then you can check it out here. It is a very nice Mathematica-inspired “notebook” interface that allows you to work on bits of code in independent cells. It’s a dream come true for exploratory data analysis.

If you do not already have it, you will also need to install the rpy2 module. If you are using Windows, I recommend using Christoph Gohlke’s binaries. (When using the Gohlke’s site, CTRL+F is your friend.) Once you install rpy2, you will probably need to create an R_HOME system variable, if you do not already have one, and set it to C:\Program Files\R\R-3.0.2, or some other similar location. If you are using Linux, then you probably only have to type sudo pip install rpy2 at the command line.

Once all of that is squared away, you should be able to open an IPython notebook from the terminal using,

ipython notebook --script --pylab=inline

and load the rmagic extension using,

%load_ext rmagic

Data

We will be using the rock data set that comes with R. This data can be loaded and viewed using the commands listed below. I’ve included two lines for printing the resulting image to a PNG which may be omitted in practice.

%%R r <- rock png( 'rock.png', width=500, height=500 ) plot( r ) dev.off()

In the rock data set, twelve core samples were sampled by four cross-sections, making a total of 48 samples. The permeability of each core sample was estimated, in milli-Darcies, and the total area and perimeter of the pores. The shape variable is the total perimeter divided by the square root of the total area of the pores.

The data collection was performed by BP, and the image analysis done by Ronit Katx, of the University of Oxford.

The Linear Model

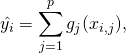

A linear model attempts to describe an output variable in terms of a linear combination of predictor variables. It should be noted that the linearity is with respect to the predictor variables; we could very easily speak of a linear combination of trigonometric functions of the predictor variables. So, we’d like to find a function of the form,

that minimizes the function,

Here, ![]() is the fitted response variable, or the value of the approximating linear function of the predictor variables. This process is readily applied with the following code,

is the fitted response variable, or the value of the approximating linear function of the predictor variables. This process is readily applied with the following code,

%%R lmfit <- lm( perm ~ area + peri, data=rock )

By calling the following, you can see a quick visualization of the residuals, the Q-Q plot, the standardized residuals, and the leverage. We’ll examine these items in greater detail shortly.

%%R png( 'lmfit.png', width=500, height=500 ) par( mfrow=c(2,2) ) plot( lmfit ) dev.off()

Analyzing the Regression

Residuals

The residual is difference between the model and the observed data. If the model fits the data well, then the residual should look like normally distributed noise around a baseline of zero. If the residual, plotted against some observed variable, has any sort of trend or structure, then that is an indication that the model does not fit the data very well. Another thing that one should look for is a more or less constant amount of jitter about zero. If the residuals look like a funnel, tightly clustered about zero at one end, and dispersed widely at another end, then that implies that your error is not constant. Likewise for other shapes, like a butterfly, where the residual has a small spread in the middle and a greater spread at the ends, etc. Anything other than normally distributed noise about a baseline of zero should be a red flag.

The residual of the model is stored in the lm$residuals variable. In order to plot the residual against the predictor variables, area and perimeter, the response variable, the permeability, and the fitted data, we would say,

%%R png( 'residuals.png', width=500, height=500 ) par( mfrow=c(2,2) ) plot( rock$area, lmfit$residuals, xlab="Area", ylab="Residuals" ) plot( rock$peri, lmfit$residuals, xlab="Perimeter", ylab="Residuals" ) plot( rock$perm, lmfit$residuals, xlab="Permeability", ylab="Residuals" ) plot( lmfit$fitted, lmfit$residuals, xlab="Fitted", ylab="Residuals" ) dev.off()

If you know the order in which the data was collected, you may want to look at an observation order versus residual plot and look for trends there. If any are present, you may want to include time as a predictor variable. A positive serial correlation is one in which observations that are close in time are also close in magnitude, and have the same sign. A negative serial correlation is one in which the signs of the residuals alternate. These are more difficult to detect visually, because they tend to look like nice white noise.

Leverage

Leverage values range from zero to one, and describe how much influence an observation has on the model. Observations with greater leverage have a greater effect on the model.

%%R png( 'leverage.png', width=500, height=500 ) leverage = hat( model.matrix(lmfit) ) plot( leverage ) dev.off()

The hat() function in the listing above comes from the hat matrix, or projection matrix, that maps the observed values to the fitted values. The main diagonal of this matrix is the leverage. The hat matrix is given by,

![]()

N.B. Here, the matrix ![]() has a column of ones, representing the constant intersection term. If you just lump a column of ones along with your predictor variables or features, it makes the mathematics and programming nicer.

has a column of ones, representing the constant intersection term. If you just lump a column of ones along with your predictor variables or features, it makes the mathematics and programming nicer.

Studentized Residuals

Recognizing that the standard deviations of residuals vary from point to point, it is helpful to rescale residuals with respect to their leverage. This technique is particularly useful in detecting outliers.

This process is termed studentizing in honor of William Sealey Gosset of t-distribution fame because the scaling uses an estimation of the spread rather than the central tendency, as with normalization.

Studentization in this case is achieved by dividing the residual of a given observation by the product of its sample standard deviation, and the square root of the complement of its leverage,

![]()

Here, ![]() is the studentized residual of residual

is the studentized residual of residual ![]() ,

, ![]() is the sample standard deviation, and

is the sample standard deviation, and ![]() is the leverage–an element on the main diagonal of the hat matrix,

is the leverage–an element on the main diagonal of the hat matrix, ![]() , mentioned above.

, mentioned above.

%%R png( 'studentized.png', width=500, height=500 ) studentized = rstudent( lmfit ) par( mfrow=c(2,2) ) plot( rock$area, studentized, xlab="Area", ylab="Studentized" ) plot( rock$peri, studentized, xlab="Perimeter", ylab="Studentized" ) plot( rock$perm, studentized, xlab="Permeability", ylab="Studentized" ) plot( lmfit$fitted, studentized, xlab="Fitted", ylab="Studentized" ) dev.off()

In the summary graphs produced by plot( rock ) we see the squart root of the absolute value of the standardized residual. This is simply the square root of the absolute value of the studentized residual.

Cook’s Distance

Cook’s distance is another measure of the influence of a given point; it balances the magnitude of the residual against the leverage. It can be used to identify data points that might warrant further investigation, or identify regions of the design space that could use more observations. As a rule of thumb, data points that register a Cook’s distance greater than 1 should receive special consideration.

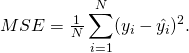

Cook’s distance is calculated using the ratio of the squared residual to the mean squared error (MSE), the leverage, and the number of parameters in the model.

![]()

Here, ![]() is the squared residual,

is the squared residual, ![]() is the leverage,

is the leverage, ![]() is the mean squared error, and

is the mean squared error, and ![]() is the number of parameters in the model. The number of parameters acts like a degrees of freedom term, reducing the influence of the point as the model complexity increases.

is the number of parameters in the model. The number of parameters acts like a degrees of freedom term, reducing the influence of the point as the model complexity increases.

%%R

png('cook.png',width=500,height=500)

cook = cooks.distance( lmfit )

plot( cook, ylab="Cook's Distance" )

dev.off()

Looking at the plot, it looks as though the top three points have particularly high influence. We can retrieve the indices of these points using the which() function from R.

%R which( cook > 0.2 )

An this returns the indices [38,42,48] which may be taken aside for further consideration.

Q-Q Plot

This is simply a scatter plot of the residual data, with a sample of the normal distribution with zero mean and variance equal to that of the residual data. If the Q-Q plot looks like a straight line, then the residual is approximately normal, and the model can be said to have a reasonably good fit. Obviously, there are more rigorous methods to determine whether the residual is normal or not, but this a quick visual check.

The Q-Q plot in this example suggests that the residuals are decidedly not normal, and that they in fact follow a heavy tailed distribution.

%%R

png('qqplot.png',width=500,height=500)

qqnorm( lmfit$residuals )

qqline( lmfit$residuals )

dev.off()

Histogram of the Residual

Another way to assess the residual is to plot its distribution. This brings us to extracting data from the R namespace and transferring it to the Python namespace. This is achieved using the $Rget magic(al) function as follows,

residuals = %Rget lmfit$residuals

hist( residuals, bins=6, color='w', hatch='//' )

title('Distribution of the Residuals')

xlabel('Residual') ; ylabel('Frequency')

savefig('residualsdist.png', fmt='png', dpi=200 )

A set of residuals that are normally distributed about zero should drop off to zero in the tails. This histogram does not display normal behavior because it has a number of very large and very small observations giving it fat-tails. The residuals in this case might have a ![]() or lognormal distribution.

or lognormal distribution.

How much do you enjoy statistics?

I love it! It’s one of my favorite things!

Hi Connor Johnson,

This post is very Interesting, I learned many new things about residual plot by this post.

I also developed a linear Model on some property observations and got a residual plot, but my Q-Q plot doesn’t looks like a linear line, still this model have R-squared value = 1, and it is predicting exact values as I want.

You can take a look of it on following link:

http://community.tableausoftware.com/servlet/JiveServlet/showImage/2-259395-15162/Untitled.png

Can you Please Explain me, why my Q-Q plot is not linear, or something like that. Here my model is working fine.

I am trying to get know why my data and its residual plot showing contradictory insights.

Can You Please Help me in this.

Thanks in Advance.

You might have overfitted your model. Are you performing cross-validation? Is your data posted anywhere? I can look at it if you like.